Method Effectiveness

Before selecting the methods we will use to collect data, we have to ensure that they are effective at collecting accurate and trustworthy information. We therefore need to consider what makes a method effective and what steps we can take to make it more suitable for the data collection process.

So what makes data collection methods effective?

There are many different methods that can be used to collect data on one or more factors. These methods are constructed differently, and each has its own benefits and limitations. For example, the benefits and limitations of a Performance Profiling Wheel are different from the benefits and limitations of the Bleep Test. This is because each method has different positive and negative elements within what is referred to as the PARV(M) acronym for assessing method effectiveness. Use this acronym when selecting and using a method by asking yourself the following:

1) Is my method of data collection PRACTICAL? Is it easy to carry out? Does it cost any money? Are the results easy to interpret?

2) Is my method APPROPRIATE? Does my method actually measure the sub-factor I am interested in? Is it performance related?

3) Does my method produce RELIABLE results? Can I trust the data? Are the protocols for this method clearly defined and administered similarly elsewhere?

4) How VALID are the processes involved in completing this method? Can I defend the process I went through?

5) Does my method have the potential to be MEASURABLE? Is the method a permanent record that can be looked back upon to gauge improvements?

Each time you select a method to collect data, you must answer the above questions. Let’s look at them in more detail.

TASK: as you go through the example answers, can you consolidate your previous knowledge and

a) Identify the command word being used?

b) Identify a common answer structure for each sentence within the paragraph?

1) PRACTICALITY

When selecting methods, you need to consider if they are practical:

a) Are they EASY to COMPLETE?

HOW: easy to SET UP? Does it require SPECIALIST EQUIPMENT? Is it SIMPLE to COMPLETE? Does it have to be completed at the TRAINING GROUND?

E.g. ‘The General Observation Schedule is practical as it is easy to complete: it is simply a table in which skills and techniques are clearly compared to their levels of effectiveness. This means it should be easy for me to fill out the table correctly, which should provide me with accurate data.’

b) Is it EASY to INTERPRET the data?

HOW: is the data TICKS/TALLIES on a page? Is the data in DIFFERENT COLOURS? Is the data TRUE or FALSE? Is the data NUMBERS on a SCALE?

E.g. ‘The Performance Profiling Wheel is practical as it is very easy to interpret because I use different colours to represent my abilities and those of a model performer. This means it is easy to make comparisons between my levels and the model performer’s, which can lead to me correctly identifying my strengths and weaknesses and creating an appropriate development plan.’

c) Does your method require any SPECIALIST EQUIPMENT, COST ANY MONEY or take a lot of TIME?

HOW: do you have to WATCH a performance back?

E.g. ‘The Mental Toughness Questionnaire is practical because it does not take a lot of time to complete as it is simply a case of reading statements and deciding if I think they are true or false. This means I will not feel rushed, so I can take my time and fill the questionnaire out seriously with well-thought-out responses that provide me with accurate data.’

2) APPROPRIATENESS

When selecting methods, you need to consider if they are appropriate.

a) Is my method relevant to the FACTOR under consideration and/or my ACTIVITY?

HOW: is my method RECOGNISED WORLDWIDE? Does SCIENTIFIC RESEARCH show that it collects data on my sub-factor? Are the processes relevant to the DEMANDS OF MY ACTIVITY?

E.g. ‘The SCAT Test is appropriate for collecting data on the mental factor as it has been scientifically proven to be an appropriate measure of a performer’s anxiety levels. This means that so long as the protocols are followed correctly, I can receive a valid measurement of my anxiety levels within my sport, which can lead to me trusting and using this information to select appropriate approaches to help me improve this area.’

3) RELIABILITY

When selecting methods, you need to consider if they give a true reflection of your performance levels. In doing this, you need to ask yourself:

1) Would I get the same results every time I used this method?

2) Does my data therefore provide an accurate picture of my performance?

In trying to answer these questions, think:

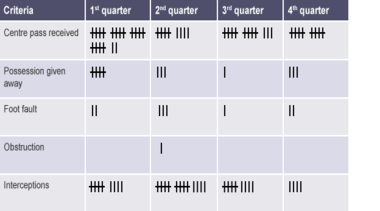

a) How MUCH DATA did I collect?

HOW: did you carry out the method over SEVERAL games? Did you get ALL OF THE DATA?

E.g. ‘The General Observation Schedule was made more effective as I ensured I boosted the reliability of my data by having my partner observe me over three games. This meant that the risk of a fluke performance was minimised as I collected a large amount of data to ensure that the picture I built up of my performance was an accurate one. This led to me being able to correctly identify my strengths and weaknesses and create an appropriate development plan.’

b) Does the method provide PROTOCOLS to follow?

HOW: is this method done the SAME WAY every time?

E.g. ‘The Standing Broad Jump Test is a reliable one because it is a standardised test providing strict protocols on how to complete it. This means that this method is completed the same way every time and provides more trustworthy results and comparisons between initial tests and re-tests.’

c) What TYPE OF DATA is collected?

HOW: does the method collect OBJECTIVE/SUBJECTIVE data? Could BIASED OPINIONS cloud results? How HONEST were responses? Did you use it in CONJUNCTION with VIDEO ANALYSIS?

E.g. ‘It is important to use the Disciplinary Record in conjunction with Video Analysis to boost the reliability of your data. This is because having games recorded and watched back on video can overcome the problem of your partner missing something in a fast-paced game, as they can slow the footage down, pause it and/or rewind it to ensure nothing is missed and that your data provides an accurate picture of your ability to control your anger.’

4) VALIDITY

When selecting methods, you need to consider how correct the collection processes are:

a) What LEVEL OF OPPONENT did you play against?

E.g. ‘The General Observation Schedule provided me with valid results because I played against opponents who were of the same ability as me. This led to me playing my natural game and gave the correct impression of my typical performance in league games, which ensured the strengths and weaknesses identified were correct.’

b) What LEVEL OF KNOWLEDGE did the recorder have?

E.g. ‘The results from my Focused Observation Schedule were made more valid because I asked my teacher to read over my answers. This meant I had a knowledgeable other checking that my partner had completed my observation schedule correctly, which boosted the validity of my results. This led to me being more confident that I was about to create a development plan that focused on my actual weaknesses.’

c) Do you UNDERSTAND the questions/statements/criteria?

E.g. ‘The results produced in the Sports Emotion Questionnaire were valid because the questions were easy to understand. This meant I knew exactly what the question was asking of me and I provided the correct answer as there was no misunderstanding on my part.’

d) Were the PROTOCOLS followed CORRECTLY?

E.g. ‘It is important that you follow the protocols correctly when setting up the Bleep Test if you want to produce valid results. This is because if you conduct the Bleep Test on a flat surface rather than a hill, you rule out the possibility of tiring due to something other than your heart and lungs nearing exhaustion, meaning you can better defend your results.’

5) MEASURABLE

When selecting methods, you need to consider whether they are PERMANENT RECORDS OF DATA.

E.g. ‘The POMS Test was measurable as it was a permanent record of data. This meant it was easy for me to compare results from my re-tests to my baseline measurement and chart any progress I had made. This led to me making alterations to my development plan when I noticed I had hit a plateau, as I made my sessions more challenging to stimulate improvements.’

Remember your task? Here are your answers:

a)

Practicality: all explain.

Appropriateness: explain.

Reliability: evaluate, explain, analyse.

Validity: explain, evaluate, explain, analyse.

Measurable: explain.

b)

Explain: the first sentence was a statement that made reference to what part of PARV(M) was under consideration and how. The second and/or third sentences then proceeded to justify why this part made the method effective.

Analyse: the first sentence stated what the important consideration was in reference to PARV(M). The second and/or third sentences then proceeded to justify, in-depth, why this part was important and how it makes the process of data collection more robust/suitable/effective.

Evaluate: the first sentence made a statement containing an evaluative term such as ‘more’ and made reference to the aspect of PARV(M) under consideration. The second and/or third sentences then justified why this value was given with further evaluative terms/phrases being mentioned.

NEXT STEPS:

It is important that you always go through the PARV(M) acronym when assessing how effective a method is. You can go through this process when answering explain, analyse and/or evaluate data collection method questions.

Let’s now look at the different methods used to collect data across the four factors, starting with the mental factor.